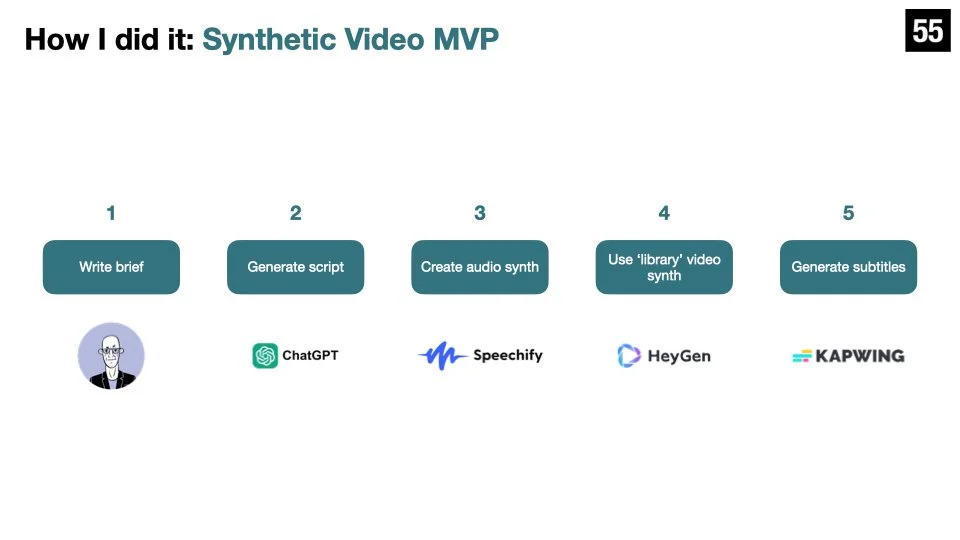

Quick MVP of synthetic audio & video content

I’ve been wanting to try this ever since my HeyGen account was activated last year, and I’m very excited to finally have the time to run the experiment and share it. I originally planned to use a custom avatar based on my own image, but I decided instead to see how far I could get with free tools alone. In some ways, that proved to be an even more fascinating journey.

Step 1 - Script

The first stop was choosing a topic. In this case, I chose the work that 55BirchStreet is doing with generative AI for our clients. I gave ChatGPT a prompt explaining what I wanted and got a pretty decent script back straight away.

Step 2 - Synth Audio

Next, I went to Speechify, uploaded a one-minute recording of my voice (reading an FT article), uploaded the ChatGPT script, and played back the free synthetic audio of “me” reading it. The free version wasn’t that impressive, so I paid for a one-month subscription to get the premium version of the synth, which is ‘night and day’ better and really impressive.

Step 3 - Subtitles

Now I had a great audio synth, but I wanted to make it easier to follow. So I converted the audio to video with Online Converter, which gave me a video with no image: just the synth of my voice reading the ChatGPT script. I uploaded this video to Kapwing to add automated subtitles, which you can do for free if you can live with the Kapwing watermark.

Step 4 - Synth Video

I didn’t go as far as creating a personalised video avatar, because I wanted to limit the cost of the experiment. Instead, I used one of the ‘library’ AI video avatars from HeyGen. It’s very surreal watching a pretty realistic avatar of someone else saying, in your voice, words that you have never actually spoken or written.

Key Learnings

It took me a while to put the exact workflow together. Although I knew all the technology existed, I wasn’t specifically aware of Kapwing, Speechify or Online Converter; I just knew that HeyGen would be my final destination. I’m super impressed with the result.

Potential Use Cases

The quality of both the video synth and the ChatGPT script is good enough that a number of use cases are immediately apparent. Two categories spring to mind:

- Content already in video form that needs to be updated frequently (e.g. employee or user training videos, updated every time a process changes, and even personalised for different roles or departments).

- Content that would normally be too expensive to produce at scale (e.g. weekly style-advice videos for eCommerce fashion retailers, personalised for every customer).

Overall, this was a really fun and very instructive experiment. It’s honestly the kind of thing anyone can do in one evening with a ChatGPT Plus account and 20 euros to spend on a one-month audio/video subscription. Even if it’s a while before your company implements anything like this at scale, it’s really valuable to get the hands-on experience and understanding now.