How good is DeepSeek?

DeepSeek, the new Chinese LLM, is impressive. But don't jump ship from your preferred AI tools and models just yet. Here's why.

Yes, DeepSeek is impressive, especially considering how much less it cost to train than comparable models (DeepSeek V3 was reportedly trained in around two months at a cost of US$5.58 million, compared with an estimated US$41 million to US$78 million for GPT-4).

But in this experiment, while it gave a functional response, the result was neither fully usable nor as nuanced as the output from ChatGPT 4o or o1.

The experiment involved identifying potential customer profiles for “AI EMBRACE”, an internal marketing programme that helps companies drive up adoption of generative AI among their employees.

Prompt 1:

"Can you give me some suggestions for companies in Berlin or Hamburg, that might be struggling to get enough adoption of generative AI amongst their employees - and that would benefit significantly if their adoption increased."

Prompt 2:

"Yes, great thank you. What kind of role do you think the attached offer would appeal to?*"

(*I uploaded a PDF with details of “AI EMBRACE”; hit me up on LinkedIn if you’d like to see more about this programme.)

Prompt 3:

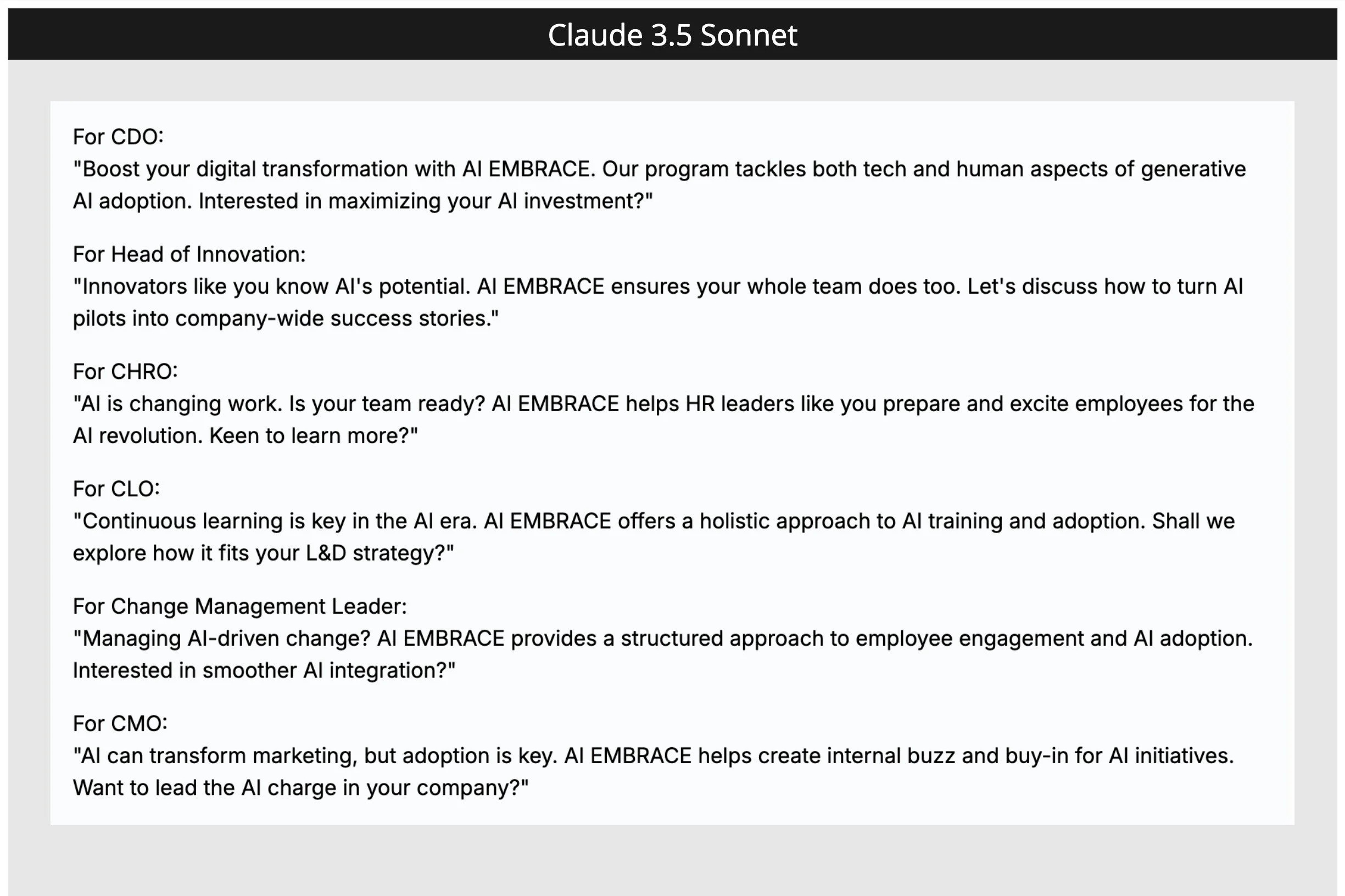

"Could you write some very, very short text for a LinkedIn direct message, as outreach about this programme to people in those roles."

Below you can see the results from DeepSeek, ChatGPT 4o / o1, Claude 3.5 Sonnet and the Gemini 2.0 ‘Experimental Advanced’ version. Honestly, none of them is perfect.

And while it’s evident that DeepSeek is closely modelled on ChatGPT 4o (you can see that in the structure of its responses), I think the tone is off. Even though it’s much cheaper than ChatGPT, I wouldn’t swap it in for any workflow involving copywriting or strategic topics.

This raises a very important point: the right model depends on the tasks you want to complete, because different models have different strengths. At Navar.ai we tested several models and chose to work with ChatGPT 4o and Claude 3.5 Sonnet, because for the workflows we tackle they give us the best overall performance.

I suggest using HuggingChat or Poe, which let you try different models on the same task so you can make an informed decision. None of this is easy: it takes (human) time and thought, and needs to be repeated regularly as models evolve. (Maybe one day this can all be automated based on your requirements and preferences, with model-switching recommendations prepared on a schedule for you to approve. But I don’t think this exists right now unless you’re building it into your own bespoke tools.)

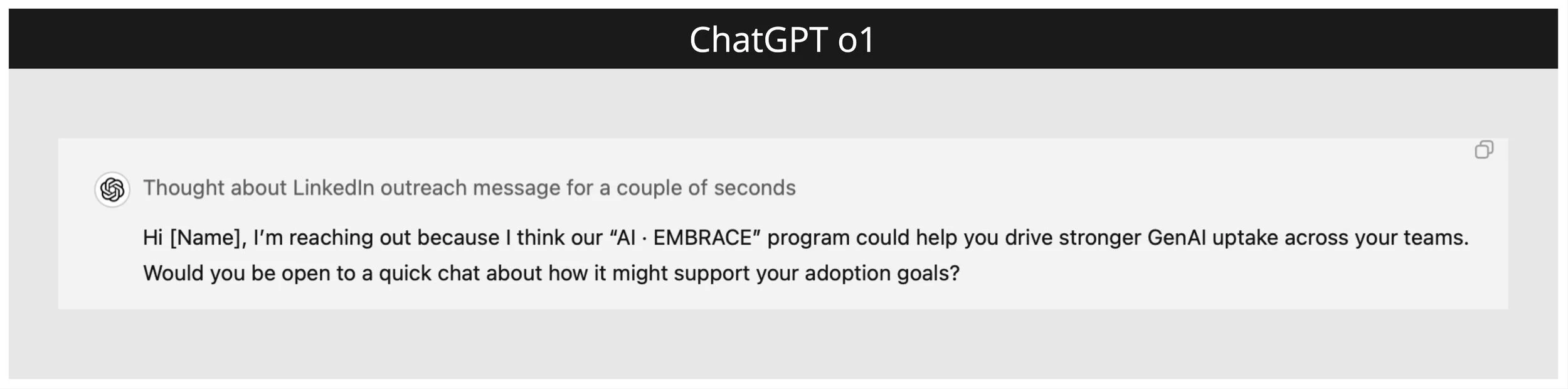

The final step was the most insightful. ChatGPT o1’s simple and concise response impressed me the most, so I onboarded it as a collaborator for the rest of the experiment. I shared all of the responses and gave it the following prompt:

“Hey. I'm working on some text for an outreach message for LinkedIn. It's to promote this programme. Several different LLMs have given me text, if I share their work can you please evaluate and let me know which one you recommend and why. (Feel free to adapt any of them and make improvements, to create the perfect text.)”

Here’s the final suggestion from ChatGPT o1, which I think is actually very usable:

“Below is a “best of all worlds” sample that’s short enough for LinkedIn, but also role‐sensitive and highlights the program’s uniqueness. Feel free to adapt tone or length:

Subject (if applicable): Accelerate AI Adoption with “AI EMBRACE”

Message:

“Hi [Name], I noticed your role in [Function/Department]. We’ve been helping teams accelerate AI adoption through our ‘AI EMBRACE’ program—covering both the tech and human side of change.

Would a quick chat help you see how it might boost [specific goal: L&D results / digital transformation / marketing ROI]? Happy to share a brief overview if you’re interested.”

Why it works:

• Personalization: References their role or focus.

• Unique Value: States “both the tech and human side” to set your program apart.

• Brevity: Short enough for LinkedIn; optional extra details can follow once they engage.

• Clear CTA: Direct invitation to chat or receive an overview.”

This sums up where we are right now in this incredible and overwhelming AI journey: it’s possible to get amazing results in an impressively short space of time, but it takes time and skill to get the most out of LLMs in their current incarnations. (Even sophisticated reasoning models like o1 still benefit from a collaborative, multi-step approach to prompting.)

A whole set of ‘vertically oriented’ AI tools is springing up now, each dedicated to a specific use case (sales, HR, customer service). These promise to save individual users from having to understand LLMs, model strengths, prompting techniques or workflows, because all of that is built into the tools.

Personally, I still think there’s huge value in forming your own AI hypotheses and running your own experiments, even if you don’t work in technology. It will help you have informed discussions with AI experts, and even help them understand model capabilities for your specific use cases.

The explosion in tools and model capabilities is already creating completely new roles, such as GTM (go-to-market) Engineers, and potentially soon HR Ops Engineers. I’m going to make that the subject of another post shortly.